By Keith Child and Elena Serifilippi

A good impact evaluation can be incredibly insightful, but as all evaluators know, producing a good evaluation can also be incredibly challenging. Sometimes managers make mid-course changes that affect the content and timing of a project rollout. Intended beneficiaries may receive no treatment while control groups inexplicably do receive one (if not from the project, then from somewhere else). Then there is the potential for mass attrition between the baseline and endline studies. Add to this mix the hazards of poorly trained enumerators, freak weather, unexpected shocks, and revolving implementation staff, and it quickly becomes clear that impact assessments are not for the faint of heart. Maybe having a Plan B is not such a bad idea after all!

COSA’s research team recently sat down to share their experiences in conducting impact evaluations, focusing on the particular challenges inherent to measuring sustainability impacts. Making use of learning opportunities and insights is part of the COSA culture. Here are four key ideas on how to manage a difficult impact evaluation process:

What to measure?

Setting boundaries for an evaluation is essential but also somewhat counterintuitive when the purpose of the evaluation is to consider sustainability questions, which by their nature extend across systems. For example, the subject of assessment may be variously defined as (1) a development program, (2) a geographic area ranging from a confined field trial up to an entire landscape, (3) an intervention strategy, (4) a target group, and so on. Delineating the scale of the evaluation is a necessary step that will shape the evaluation question(s), along with theoretical and methodological considerations. Evaluators typically prefer individuals and households as the unit of analysis because the impacts of projects designed to work at landscape scale are too abstract to pin down. People and households are tractable and offer a convenient unit of study from which to infer causal relationships. Of course, in doing so, evaluators run the risk of ‘not being able to see the forest for the trees’. Does focusing the analytical lens so narrowly miss broader landscape-level issues? At COSA we have thought seriously about system-level properties and have developed a landscape methodology that works[1].

When to measure?

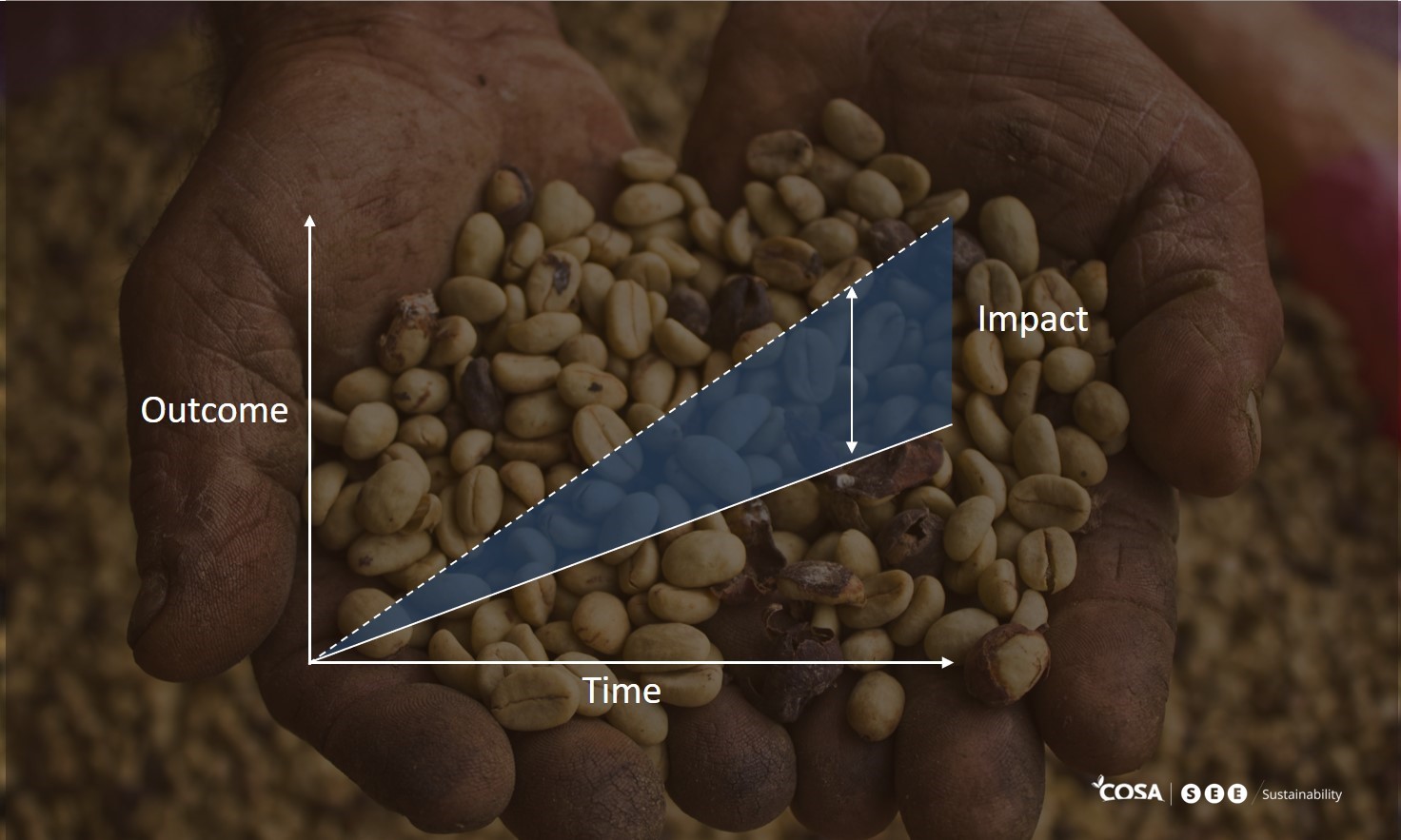

When to evaluate impacts is a difficult question for any development project, but particularly so for ones that target sustainability. Impacts take time to show up in the data, and one or even a few years may not be long enough. How long should a project manager wait before expecting to see a sustainability impact? Once they identify a change (positive or negative), can they assume that the change will continue at a linear and monotonic rate? Understanding a project’s ‘impact trajectory’ is essential for any impact assessment, mainly because success rarely resembles a straight line rising across an XY graph; it is more likely to take the shape of a J-curve, an S-curve, or even a step function. It stands to reason that if we wrongly assume the shape of the curve, or where on it our data points fall, we are likely to draw all the wrong conclusions. For example, if our endline evaluation falls at the bottom of a J-curve, we will conclude the project is a failure, even though an evaluation at a later point along the curve could support the opposite conclusion. Despite the pressure to show donors impressive short-term results, implementers need to push back: sustainability impacts more realistically take a decade or more to materialize.
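To make the timing problem concrete, here is a toy Python sketch. Both curves are made up for illustration (the functions `j_curve`, `s_curve`, and `linear_projection` and all their parameters are hypothetical, not estimated from any project data); it simply shows how the same project can look like a failure or a success depending on when it is measured, and how extrapolating an early straight-line trend misreads a J-curve.

```python
import math

# Hypothetical impact trajectories: "impact" is in arbitrary units and t is
# years since the project started. Neither curve comes from real data.


def j_curve(t: float) -> float:
    """Toy J-curve: impact dips below zero, bottoms out at t = 2, and
    turns positive after t = 4 (e.g. a transition cost before gains)."""
    return 0.25 * t ** 2 - t


def s_curve(t: float, ceiling: float = 10.0, midpoint: float = 6.0) -> float:
    """Toy S-curve (logistic): little visible change early on, rapid
    change around the midpoint, then a plateau near the ceiling."""
    return ceiling / (1.0 + math.exp(-(t - midpoint)))


def linear_projection(curve, t0: float, t1: float, t_future: float) -> float:
    """Naively extend the straight line through two early observations."""
    slope = (curve(t1) - curve(t0)) / (t1 - t0)
    return curve(t1) + slope * (t_future - t1)


if __name__ == "__main__":
    for year in (2, 4, 8):
        print(f"year {year}: J-curve impact = {j_curve(year):+.2f}, "
              f"S-curve impact = {s_curve(year):+.2f}")
    # An endline at year 2 sees the bottom of the J-curve; extrapolating the
    # year 1 to year 2 trend predicts further decline, but the curve recovers.
    print(f"linear projection to year 8: {linear_projection(j_curve, 1, 2, 8):+.2f}")
    print(f"actual J-curve at year 8:    {j_curve(8):+.2f}")
```

In this toy setup, an endline at year 2 would record a negative impact on the J-curve and almost none on the S-curve, while a measurement at year 8 would show clear gains on both.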

Have a Plan B.

Experimental and quasi-experimental designs are great, but sometimes they just don’t work, or at least they don’t work as well as we would like. Always have a Plan B! This might consist of running efficiency trials during the technology development stage, conducting field experiments at the beginning of a project to understand farmer preferences, or conducting qualitative interviews with farmers and household members to gain a deep understanding of why they make certain choices over others. If the proverbial bottom falls out of the cart, these and other data collection activities make it easier to switch to an alternative evaluation design. The secret lies in a mixed-methods approach[2] that combines qualitative and quantitative best practices suited to the characteristics of the project. For example, the main quantitative survey can include retrospective questions, perception questions, and questions about the direction of change, which together provide stronger evidence about the impact of the intervention.

Sometimes a Baseline and Endline are not enough!

Increasingly, ongoing and routine performance monitoring is employed to measure sustainability gains because it offers a faster and more affordable way to track sustainability performance. Regular data collection can be integrated into normal intervention activities at a fraction of the cost of a rigorous impact evaluation and, if done correctly, can provide an almost real-time dashboard of indicators that informs iterative learning and adaptive management. While performance monitoring is not a replacement for impact evaluation, it can lead to better sustainability outcomes and, by supplying longitudinal data, reduce the cost of a more robust impact evaluation.

A good evaluator can switch gears in a worst-case scenario, turning a potential disaster into a viable impact study. The trick is to turn every evaluation into a learning opportunity by encouraging innovation and knowing how to avoid the most obvious risks. As the old saying goes, “when life gives you lemons, make lemonade.”

[1] Landscape measurement tools blend big data streams on factors such as deforestation with explanatory farm-level micro data (e.g., low productivity, large families, poverty) to create a more holistic picture of sustainability. Seeing trends and causal relationships makes it easier to pull the appropriate levers through policy, investment, or targeted interventions.

[2] COSA’s report “Impacts of Certification on Organized Small Coffee Farmers in Kenya” employed a mixed methods evaluation design.